Genome Editing: A 50-Year Quest

The long struggle that led to CRISPR.

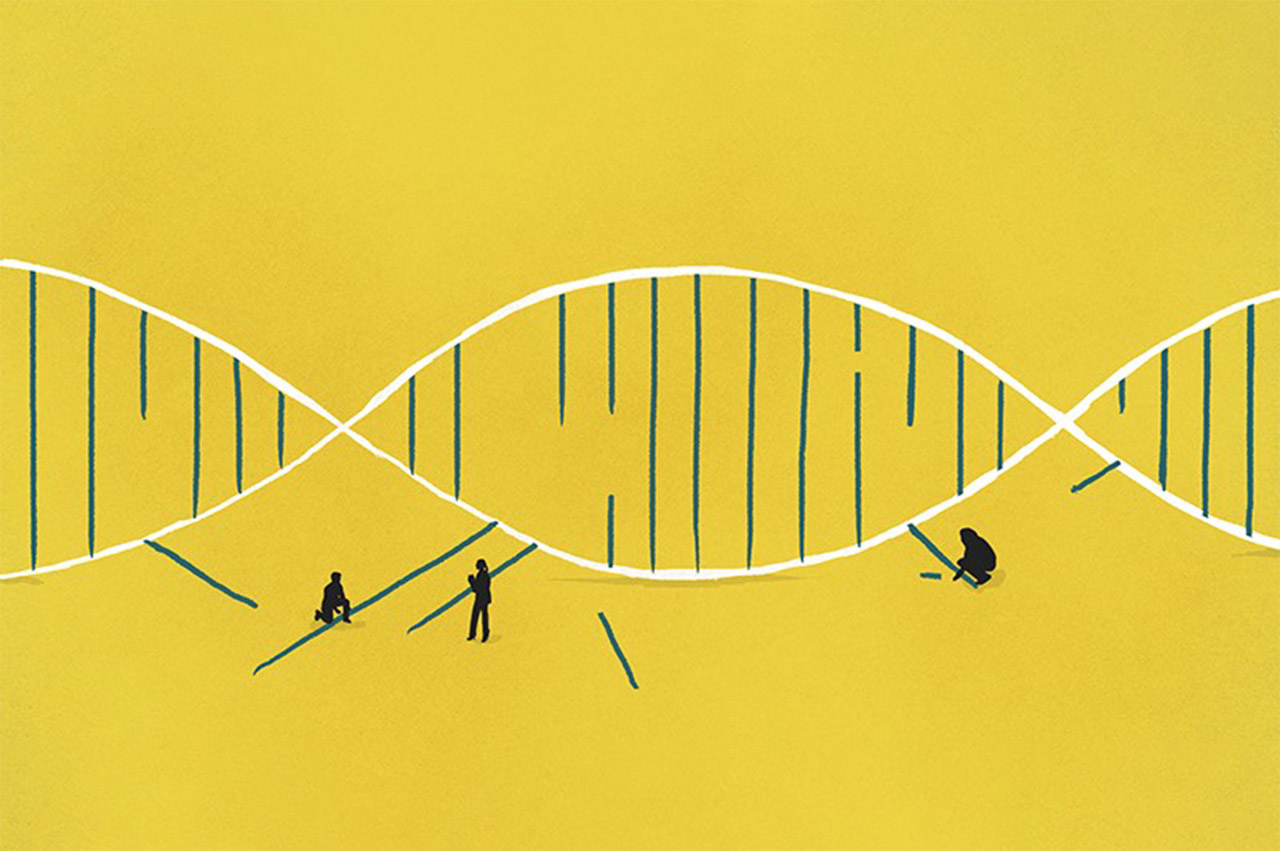

Scientists began searching for ways to edit genomes in the 1960s. Working in test tubes, researchers at UCSF and Stanford bombarded DNA with various combinations of molecular widgets, all borrowed from bacteria. Some of these widgets slice apart DNA bases like miniature scythes; others fasten them together like glue.

Herbert Boyer, PhD

In 1972, after a few years of trial and error – much of which took place in the UCSF laboratory of Herbert Boyer, PhD, then an assistant professor who would go on to co-found the biotechnology giant Genentech – the researchers landed on a recipe for cutting and pasting DNA. For the first time, it was possible to mix and match genes to create hybrid sequences – called recombinant DNA – that had never before existed.

You could, let’s say, take a virus like HIV, delete the genes that make it virulent, and splice in a gene from a human cell. You could then unleash copies of your recombinant virus in the cells of a patient with a diseased copy of this gene. The viruses would naturally insert the new gene into the cells’ DNA, where it could compensate for its native, mutant twin and alleviate the disease’s symptoms.

This scenario is the basis of gene therapy – imbuing cells with healthy genes to make up for sick ones. It’s a promising approach. First tried in 1989, gene therapy progressed in fits and starts, plagued by unexpected setbacks – most notably the death of a patient in 1999. Those early problems, however, have been largely worked out, and “gene therapy 2.0” is now being tested in hundreds of clinical trials across the U.S., including several at UCSF clinics to treat sickle cell disease, beta thalassemia (a rare blood disorder), severe combined immunodeficiency syndrome (sometimes called “bubble boy disease”), and Parkinson’s disease.

Still, the technology has its drawbacks. It’s really more of a patch kit than a repair shop, and an imperfect one at that. Because gene therapy adds a new gene at an unpredictable spot in a cell’s genome, the gene’s fate isn’t a sure thing. Genes, after all, don’t work in isolation. They lie amid various DNA segments called regulatory DNA, which tell the cell how to read the code, much like notations on a music score. Consequently, a therapy gene – randomly inserted into the genome by a virus – might land near regulatory DNA that silences it, rendering it useless. Worse, it might disrupt a healthy gene or turn on a gene that causes cancer.

In the early 2000s, scientists went searching for tools they could better control. By cobbling together parts of natural proteins, they found they could synthesize artificial proteins able to target mutations at desired locations in a genome. One of the more capable creations, called a zinc-finger nuclease (ZFN), has already made its way into clinical trials. The first test in a human patient was led by Paul Harmatz, MD, at UCSF Benioff Children’s Hospital Oakland – in partnership with Richmond, Calif.-based Sangamo Therapeutics – in 2017.

Engineering proteins, however, is no small feat. It takes months or even years to adapt a ZFN to target just one of the many thousands of known disease-causing mutations. The process is simply too time-consuming and costly to be of practical use in treating the vast majority of genetic diseases.

For nearly a decade, researchers struggled to find a better way – until, in 2012, CRISPR came along.